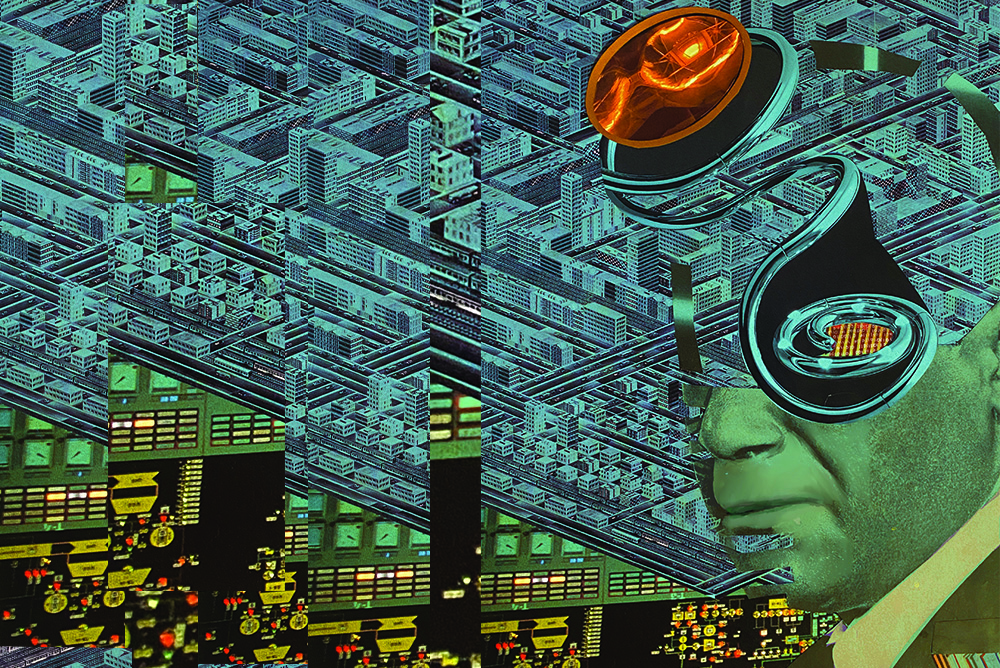

What do we give up when we incorporate tracking devices into our lives—and our bodies? Scholars M.G. Michael, Katina Michael, and Roba Abbas reflect on “uberveillance.” Illustration by Jason Lord.

The smartphone has become a modern Swiss Army knife: driver’s license, e-payment device, camera, radio, television, map, blood pressure monitor, workstation, babysitter, pocket AI, and general gateway to the internet. And now consumers are leaving their smartphones behind to sport lightweight smartwatches with equivalent functionality. With every update, our devices inch closer to us—our bodies, our minds. From the handheld, to the wearable, to the … What next?

Nearly 20 years ago, in 2006—before X, Amazon Web Services, iPhone, Fitbit, Uber, or ChatGPT—M.G. Michael was faced with a similar question. He was guest lecturing on the “Consequences of Innovation” at the University of Wollongong in Australia, focusing on emerging technologies in security. A student asked: “So then, where is all this surveillance heading?”

For a couple of years already, we had noticed hints of an ultimate destination in patents, pilots, and proposed products and services: chips implanted inside the human body to identify people and offer them digital services on demand. Hardware placed in the arm might let one pay at the checkout simply by waving a hand, or allow a first responder to scan a patient’s vital signs and medical records in an emergency. Such implants brought with them a perceived increase in security. They remained inside the body, hidden from view, and could not be stolen or accidentally left behind.

M.G. searched for a word that would summarize what he was seeing emerge in these fields, and all around us. He imagined a coming together of Orwell’s Big Brother, microchip implants, radio-frequency identification (RFID) devices, the Global Positioning System (GPS), apocalypticism, and Nietzsche’s idea of the ultimate, superior, progressed human form, the Übermensch. On the spot, he called it “uberveillance.” The neologism soon entered the lexicon.

Uberveillance is fundamentally an above-and-beyond, exaggerated, almost omnipresent, 24/7 form of electronic surveillance. It is not only always on but also always with you, like an airplane flight recorder: a personal “black box.” Or, if you prefer, it is like Big Brother on the inside looking out.

This kind of bodily and hyper-invasive monitoring is not risk-free, and won’t necessarily make us safer and more secure. Omnipresence in the physical world does not equate with omniscience. Despite their tremendous data gathering capacities, there is a real concern that implantable devices will breed misinformation, misinterpretation, and information manipulation, all of which may lead to misrepresentations of the truth.

In our original conception, uberveillance was multidimensional. A tiny RFID transponder, implanted in the arm, would connect with sensors such as accelerometers, gyroscopes, and magnetometers. Uberveillance would use GPS and other technologies to allow designated authorities to understand the who, where, when, why, what, and how. We imagined government authorities would use it in the context of civilian, commercial, or national security—as a find-me alert. Uses might include monitoring people living with dementia or on extended supervision orders; tracking crime suspects, parolees, notable public figures, or dignitaries; and controlling access to secured buildings or rooms.

Yet despite the perceived benefits, even in the early 2000s, we couldn’t ignore the sinister undertones. How far would this go? Was uberveillant technology too alluring—difficult to resist because of its ease of use? What if it did not always work as it should, proving subject to tampering, data bias, and inference?

Constructing a verifiable digital end-to-end cyber-physical-social reality is impossible. There is no substitute for real life. Recorded data—incomplete, from multiple sources and without necessary quality checks—are not always accurate. Global positioning coordinates may lack precision when tall buildings obstruct the line of sight between a handheld or wearable device and the satellites. Network black spots appear when an individual leaves an urban space or moves between locations.

Furthermore, uberveillant systems leave out context. An image of an altercation may seem to provide evidence that implicates an individual, but snapshots of moments prior may show that they were acting in self-defense. Near real time is not real time. This is the great flaw in uberveillance.

Without capturing context, an accurate chronicle of activity is unattainable. And a flawed chronicle of activity can be devastating. GPS coordinates with a lag may tie a user to a suspicious event; facial recognition algorithms may identify a passerby as an individual of interest; implants that have been spoofed may appear in multiple hit lists, cloaking the identity of the bona fide individual at a given location; and biometric data could be interpreted to indicate distress when a subject was simply lost in thought.

Your cell service provider or smartwatch manufacturer might assure you they’ll only use your data for research. But they may also inform you they have no control over how their partners might use the biometric and other data downstream. Your wearable data could one day end up in an AI model, be used by a prospective employer during a hiring process, or be presented as evidence in a court of law. The wrong data might render you unemployable, uninsurable, and ineligible for government benefits. In an instant you could become persona non grata.

Uberveillance advances the idea of “us” versus a series of “thems”—data brokers, Big Tech companies, government agencies, hackers, secret intelligence, first responders, caregivers, and others. In doing so, “they” have power over how others perceive us and use our data, potentially building multiple black boxes containing intricate profiles—limited accounts of what makes us “us.” This technology is not free, and will not set us free.

Today, as in 2006, this strikes us as technology’s natural trajectory. From the moment ENIAC, the first programmable, electronic, general-purpose digital computer, was dubbed “an electronic brain,” computing was always going to fuse with the body once it reached its ultimate technological potential.

The paradox of all this pervasive vigilance is that the more security we hope for, the less we get.

“Nothing was your own except the few cubic centimeters inside your skull,” Orwell ominously wrote in *1984*. And yet uberveillance threatens that too: An embedded “smart” black box in the human body would encroach on the last fragment of private space. An internal closed-circuit television feed, if used to surveil thoughts, rituals, habits, activities, appetites, urges, and movements, could bring about the most dehumanizing of prospects—a total loss of control and dignity. Such dystopian scenarios are no longer sci-fi imaginings alone.

This has ontological implications that bear directly on the nature of being. It could represent the consequential deconstruction of what it means to be human, to have agency, and to make choices for oneself. If uberveillance continues to expand and forge ahead on its current path, the possible scenarios are countless and the potential consequences staggering. At that point, we will have surrendered more than just our privacy.
